During the last bhyve weekly call, Michael Dexter asked me to run the bhyve CPU Allocation Test that he wrote, to see whether the number of CPUs in the guest makes the system take longer to boot.

Here’s a post with the details of the test and my findings.

The host machine runs the following:

# uname -a

FreeBSD genomic.abi.am 13.2-RELEASE FreeBSD 13.2-RELEASE releng/13.2-n254617-525ecfdad597 GENERIC amd64

# sysctl hw.model hw.ncpu

hw.model: AMD EPYC 7702 64-Core Processor

hw.ncpu: 256

# dmidecode -t processor | grep 'Socket Designation'

Socket Designation: CPU1

Socket Designation: CPU2

# sysctl hw.physmem hw.realmem hw.usermem

hw.physmem: 2185602236416

hw.realmem: 2200361238528

hw.usermem: 2091107983360

Basically, it’s FreeBSD 13.2 with 2TB of RAM and 2 CPUs with 64 cores each and 2 threads per core, totaling 256 vCores.
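
As a quick sanity check on those numbers, here is a small sketch of the arithmetic. The values are copied from the output above (two sockets from dmidecode, 64 cores from the model name, 2 threads per core); the variable names are made up for illustration.

```shell
#!/bin/sh
# Values taken from the host output above; variable names are illustrative.
sockets=2
cores_per_socket=64
threads_per_core=2

# Logical CPUs = sockets x cores per socket x threads per core.
logical_cpus=$((sockets * cores_per_socket * threads_per_core))
echo "logical CPUs: ${logical_cpus}"

# hw.physmem is reported in bytes; convert it to TiB (1024^4 bytes).
physmem_bytes=2185602236416
physmem_tib=$(awk -v b="$physmem_bytes" 'BEGIN { printf "%.2f", b / (1024 ^ 4) }')
echo "physical memory: ${physmem_tib} TiB"
```

The product should match what `sysctl hw.ncpu` reports.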

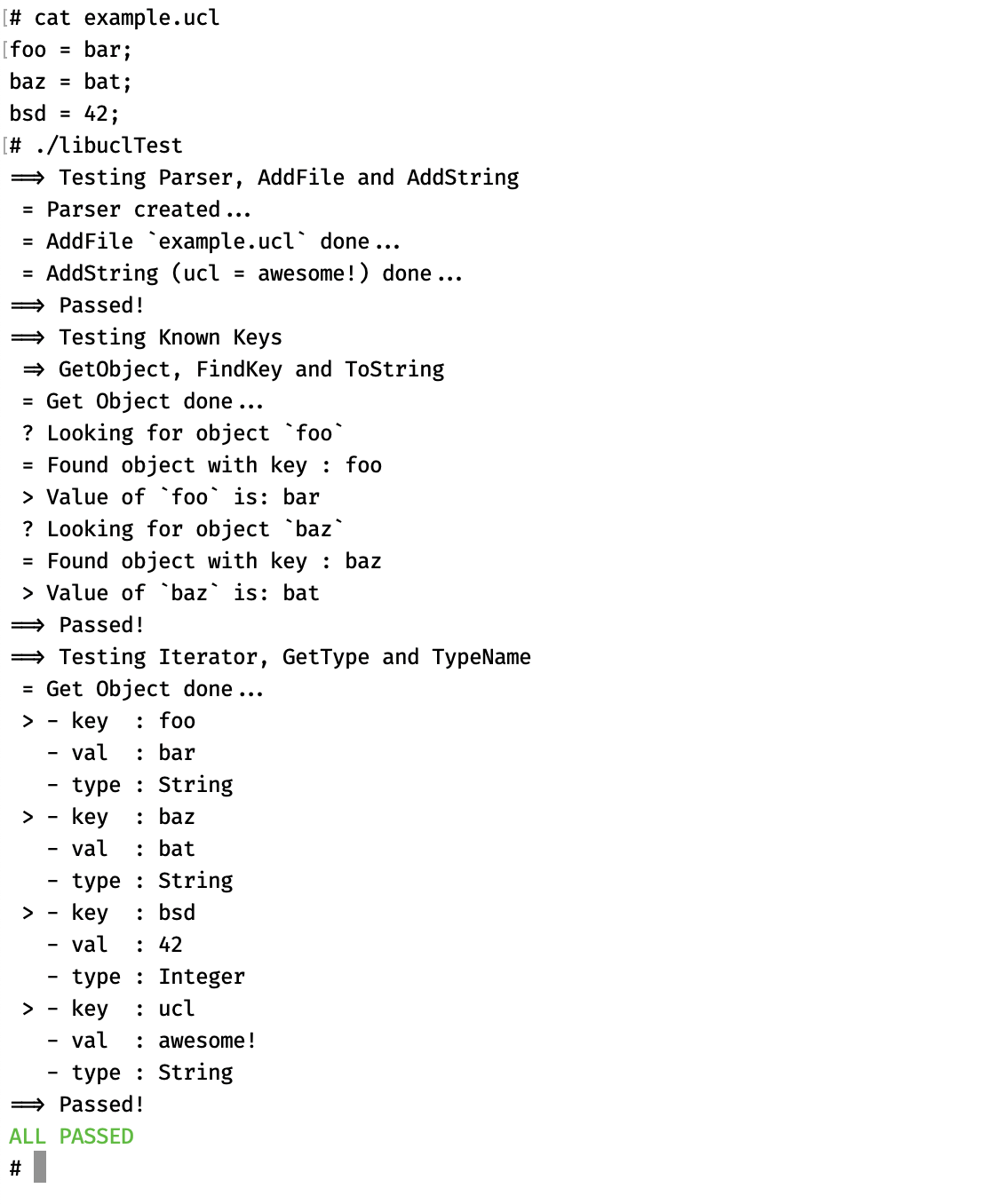

The test runs a bhyve VM with a minimal FreeBSD that’s built with OccamBSD. The main changes are the following:

- /boot/loader.conf has the line autoboot_delay="0"
- There are no services enabled
- /etc/rc.local has the line shutdown -p now

The machine boots and then it shuts down.
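
To give an idea of how such a boot-and-shutdown sweep can be timed, here is a minimal sketch. It is not Michael’s actual script: `time_boot` and `run_sweep` are names I made up, and the command you pass in is assumed to be a wrapper that boots the guest with the given vCPU count and returns 0 once the guest has powered off cleanly. bhyve(8) documents distinct exit codes for reboot, power-off, halt and errors, so a real wrapper would have to map those itself.

```shell
#!/bin/sh
# time_boot LABEL COMMAND [ARGS...]
# Runs COMMAND to completion, measures wall-clock seconds, and prints
# "LABEL booted in N seconds". On a non-zero exit status it prints a
# failure line instead and returns that status.
time_boot() {
    label=$1
    shift
    start=$(date +%s)
    "$@" >/dev/null 2>&1
    status=$?
    end=$(date +%s)
    if [ "$status" -ne 0 ]; then
        echo "${label} failed with exit status ${status}"
        return "$status"
    fi
    echo "${label} booted in $((end - start)) seconds"
}

# run_sweep MAX COMMAND [ARGS...]
# Calls COMMAND with the vCPU count appended as the last argument,
# for 1..MAX, and stops at the first failure.
run_sweep() {
    max=$1
    shift
    n=1
    while [ "$n" -le "$max" ]; do
        time_boot "$n" "$@" "$n" || break
        n=$((n + 1))
    done
}
```

With a hypothetical wrapper called `boot_vm`, the sweep would be started as `run_sweep 256 boot_vm`.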

Here’s what I’ve got in the log file →

Host CPUs: 256

1 booted in 9 seconds

2 booted in 9 seconds

3 booted in 9 seconds

4 booted in 9 seconds

5 booted in 9 seconds

6 booted in 9 seconds

7 booted in 9 seconds

8 booted in 9 seconds

9 booted in 10 seconds

10 booted in 10 seconds

11 booted in 10 seconds

12 booted in 11 seconds

13 booted in 10 seconds

14 booted in 11 seconds

15 booted in 12 seconds

16 booted in 9 seconds

17 booted in 12 seconds

18 booted in 18 seconds

19 booted in 14 seconds

20 booted in 15 seconds

21 booted in 22 seconds

22 booted in 17 seconds

23 booted in 23 seconds

24 booted in 10 seconds

25 booted in 10 seconds

26 booted in 17 seconds

27 booted in 14 seconds

28 booted in 15 seconds

29 booted in 12 seconds

30 booted in 15 seconds

31 booted in 31 seconds

32 booted in 19 seconds

33 booted in 15 seconds

34 booted in 32 seconds

35 booted in 18 seconds

36 booted in 22 seconds

37 booted in 24 seconds

38 booted in 17 seconds

39 booted in 24 seconds

40 booted in 13 seconds

41 booted in 15 seconds

42 booted in 23 seconds

43 booted in 37 seconds

44 booted in 21 seconds

45 booted in 19 seconds

46 booted in 12 seconds

47 booted in 17 seconds

48 booted in 19 seconds

49 booted in 17 seconds

50 booted in 18 seconds

51 booted in 15 seconds

52 booted in 20 seconds

53 booted in 14 seconds

54 booted in 22 seconds

55 booted in 18 seconds

56 booted in 17 seconds

57 booted in 92 seconds

58 booted in 15 seconds

59 booted in 15 seconds

60 booted in 17 seconds

61 booted in 16 seconds

62 booted in 22 seconds

63 booted in 17 seconds

64 booted in 12 seconds

65 booted in 17 seconds
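
A log like this is easier to judge with a few summary numbers. The following is a small sketch that reads lines of the form "N booted in S seconds" on standard input and reports the run count, minimum, maximum, mean, and the vCPU count of the slowest run. The function name `summarize_boot_log` is made up for this post.

```shell
#!/bin/sh
# summarize_boot_log: read "N booted in S seconds" lines on stdin and
# print count, min, max and mean boot time, plus the slowest run.
# Lines that do not match (e.g. "Host CPUs: 256") are ignored.
summarize_boot_log() {
    awk '
        $2 == "booted" && $3 == "in" {
            secs = $4 + 0
            if (n == 0 || secs < min) min = secs
            if (n == 0 || secs > max) { max = secs; slowest = $1 }
            sum += secs
            n++
        }
        END {
            if (n == 0) { print "no boot lines found"; exit 1 }
            printf "runs=%d min=%ds max=%ds mean=%.1fs slowest=%s vCPUs\n",
                   n, min, max, sum / n, slowest
        }
    '
}
```

Usage, assuming the log was saved to a file named `boot.log`: `summarize_boot_log < boot.log`. For the log above, the slowest run it reports should be the 92-second boot at 57 vCPUs.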

At 66 vCPUs, bhyve crashes with the following assertion failure:

Booting the VM with 66 vCPUs

Assertion failed: (curaddr - startaddr < SMBIOS_MAX_LENGTH), function smbios_build, file /usr/src/usr.sbin/bhyve/smbiostbl.c, line 936.

Abort trap (core dumped)

From this point on, bhyve crashes at every higher vCPU count, so I had to stop the loop.

I had to look into the topology of the CPUs, which FreeBSD can report using:

sysctl -n kern.sched.topology_spec

<groups>

<group level="1" cache-level="0">

<cpu count="256" mask="ffffffffffffffff,ffffffffffffffff,ffffffffffffffff,ffffffffffffffff">0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36, 37, 38, 39, 40, 41, 42, 43, 44, 45, 46, 47, 48, 49, 50, 51, 52, 53, 54, 55, 56, 57, 58, 59, 60, 61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74, 75, 76, 77, 78, 79, 80, 81, 82, 83, 84, 85, 86, 87, 88, 89, 90, 91, 92, 93, 94, 95, 96, 97, 98, 99, 100, 101, 102, 103, 104, 105, 106, 107, 108, 109, 110, 111, 112, 113, 114, 115, 116, 117, 118, 119, 120, 121, 122, 123, 124, 125, 126, 127, 128, 129, 130, 131, 132, 133, 134, 135, 136, 137, 138, 139, 140, 141, 142, 143, 144, 145, 146, 147, 148, 149, 150, 151, 152, 153, 154, 155, 156, 157, 158, 159, 160, 161, 162, 163, 164, 165, 166, 167, 168, 169, 170, 171, 172, 173, 174, 175, 176, 177, 178, 179, 180, 181, 182, 183, 184, 185, 186, 187, 188, 189, 190, 191, 192, 193, 194, 195, 196, 197, 198, 199, 200, 201, 202, 203, 204, 205, 206, 207, 208, 209, 210, 211, 212, 213, 214, 215, 216, 217, 218, 219, 220, 221, 222, 223, 224, 225, 226, 227, 228, 229, 230, 231, 232, 233, 234, 235, 236, 237, 238, 239, 240, 241, 242, 243, 244, 245, 246, 247, 248, 249, 250, 251, 252, 253, 254, 255</cpu>

<children>

<group level="2" cache-level="0">

[...]

</group>

</children>

</group>

</groups>

You can find the whole output here: kern.sched.topology_spec.xml.txt

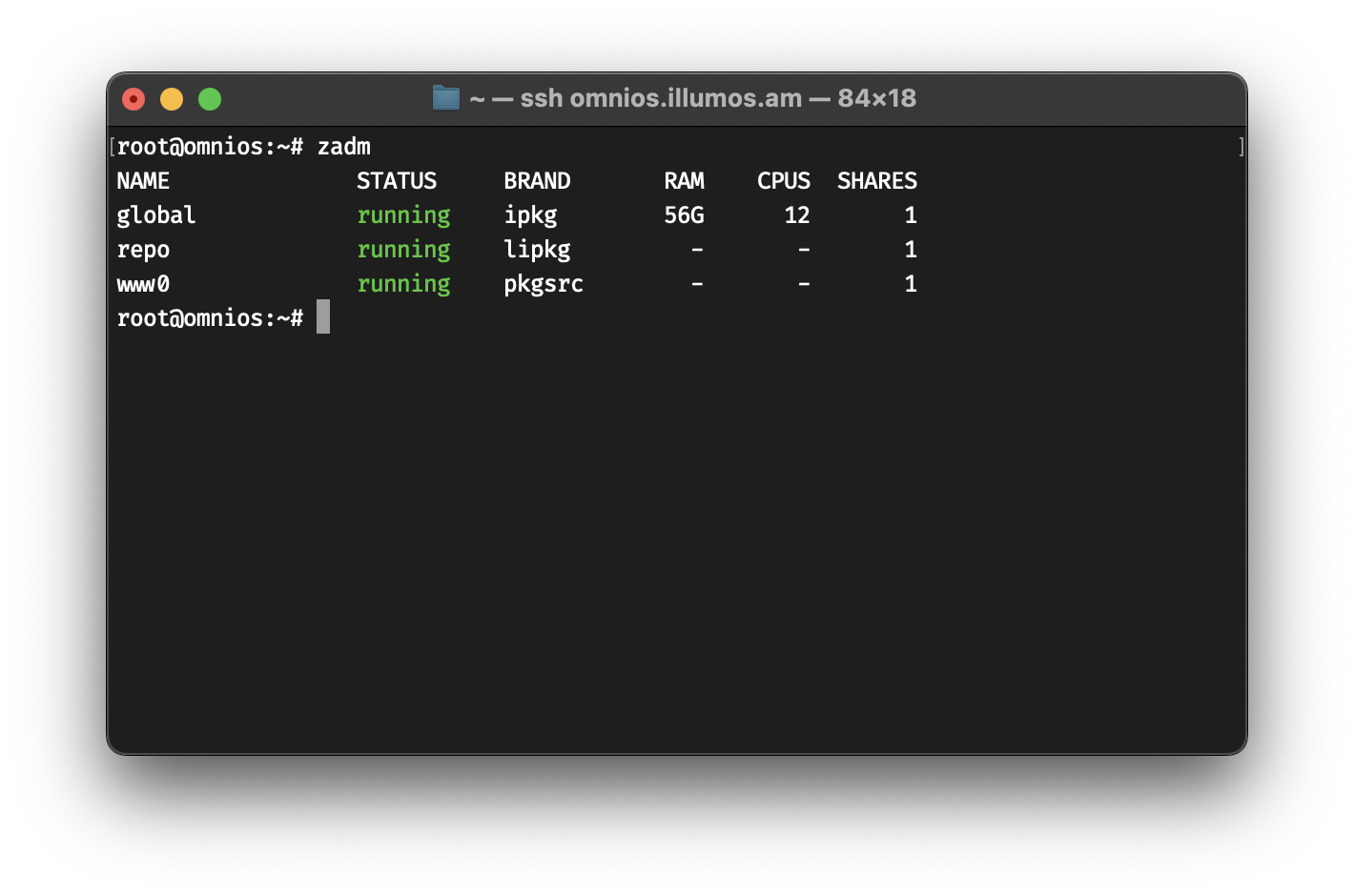

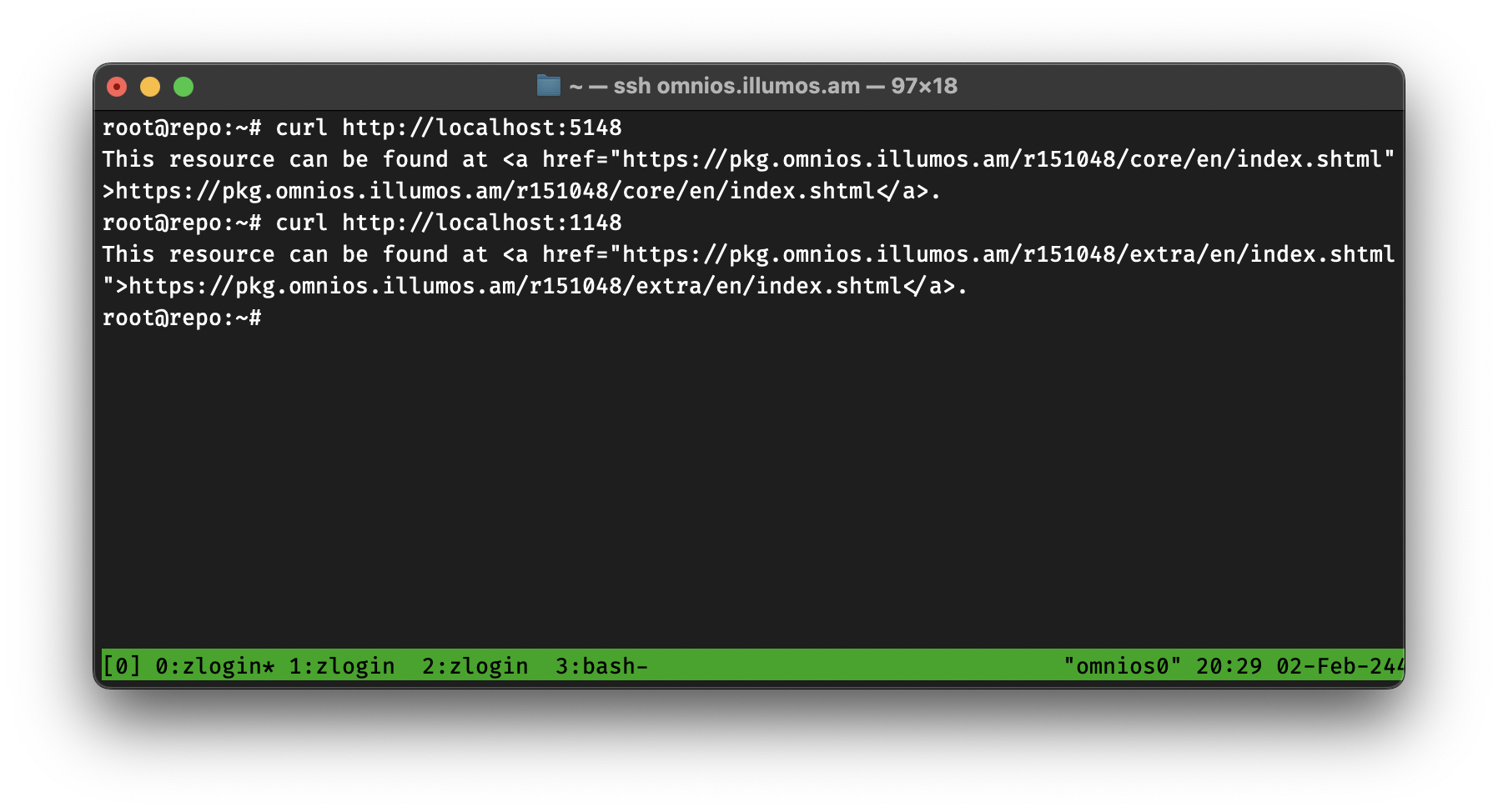

The system that we need for production requires 240 vCores. This topology gave me the idea to run that manually, using the sockets, cores and threads options →

bhyve -c 240,sockets=2,cores=60,threads=2 -m 1024 -H -A \

-l com1,stdio \

-l bootrom,BHYVE_UEFI.fd \

-s 0,hostbridge \

-s 2,virtio-blk,vm.raw \

-s 31,lpc \

vm0

And it booted all fine! 🙂

240 booted in 33 seconds
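
When specifying the topology by hand, it’s easy to make the numbers disagree. As far as I understand bhyve(8), the total vCPU count has to equal sockets × cores × threads, so here is a small sketch that builds the `-c` value from the three topology numbers and checks a given total against them. `cpu_arg` and `check_topology` are made-up helper names, not part of bhyve or vm-bhyve.

```shell
#!/bin/sh
# cpu_arg SOCKETS CORES THREADS
# Prints a value for bhyve's -c option with the total computed as the
# product, e.g. "240,sockets=2,cores=60,threads=2".
cpu_arg() {
    sockets=$1
    cores=$2
    threads=$3
    total=$((sockets * cores * threads))
    echo "${total},sockets=${sockets},cores=${cores},threads=${threads}"
}

# check_topology CPUS SOCKETS CORES THREADS
# Succeeds only if CPUS equals SOCKETS * CORES * THREADS.
check_topology() {
    [ "$1" -eq $(( $2 * $3 * $4 )) ]
}
```

For the production target, `cpu_arg 2 60 2` gives the same `-c` value as in the command above, and `check_topology 240 2 60 2` succeeds, while `check_topology 240 2 64 2` fails.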

For production, however, I use vm-bhyve, so I’ve added the following to my configuration →

cpu="240"

cpu_sockets="2"

cpu_cores="60"

cpu_threads="2"

memory="1856G"

And yes, for those who are wondering, bhyve can virtualize 1.8T of vDRAM all fine 🙂

For my debugging nerds, I’ve also uploaded the bhyve.core file to my server; you can get it at bhyve-cpu-allocation–256.tgz

As long as this is helpful for someone out there, I’ll be happy. Sometimes I forget that not everyone runs massive clusters like we do.

That’s all folks…